Why bother testing?

This post was originally written while I was at LShift / Oliver Wyman.

It’d be nice to be able to make a definitive case for the benefits of software tests, but I can’t due to this one question:

Is it possible to prove the correctness of a program using tests?

The answer is unfortunately “no, of course not” and I’ll show why below. But all is not lost – please keep reading while I take a meander through the realms of confidence…

Imagine attempting to prove the correctness of a simple Fibonacci function using just tests (e.g. in a TDD-based process) when paired with a malicious programmer – how can you specify the full range of inputs and outputs? If the input parameter is a 64-bit long, do you write 2^64 tests? Worse than that, in languages that allow buffer-overrun bugs, and in a function that takes an unchecked array parameter (yes, it happens), the number of input states is effectively equal to the total number of different states of the entire process address space: 256^(2^64).
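To make the malicious-pair scenario concrete, here is a minimal Python sketch. The names `fib_honest`, `fib_malicious`, `TESTED_CASES` and `passes_suite` are made up for illustration: the “malicious” implementation simply memorises whatever the finite test suite checks and is wrong everywhere else.

```python
def fib_honest(n: int) -> int:
    """Iterative Fibonacci with fib(0) = 0 and fib(1) = 1."""
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a


# Every input/output pair the (hypothetical) test suite happens to check.
TESTED_CASES = {0: 0, 1: 1, 2: 1, 3: 2, 10: 55, 20: 6765}


def fib_malicious(n: int) -> int:
    """Returns the memorised answer for tested inputs, and -1 for anything else."""
    return TESTED_CASES.get(n, -1)


def passes_suite(fib) -> bool:
    """Run the finite, example-based suite against an implementation."""
    return all(fib(n) == expected for n, expected in TESTED_CASES.items())


if __name__ == "__main__":
    # Both implementations satisfy the suite...
    print(passes_suite(fib_honest), passes_suite(fib_malicious))
    # ...but they disagree on an input nobody wrote a test for.
    print(fib_honest(11), fib_malicious(11))
```

However many cases are added to `TESTED_CASES`, the same trick still works, which is the sense in which example-based tests alone cannot prove correctness.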

While writing this post I’m reminded of something I first came across as a fresh-faced undergrad in the 80s, reading Douglas Hofstadter’s version of Zeno’s paradox in Gödel, Escher, Bach:

Achilles tells the tortoise, “If A and B, then C. You must accept that.”

The tortoise says, “Yes, but if ‘if A and B, then C’ is a logical premise, then the premise you just stated, which I’ll call ‘D’, is also true. So, if A and B and C, then D.”

Achilles relents and says, “Okay, but surely you must stop there!”

The tortoise says, “Yes, it is certainly true that I can stop there, but that would be yet another related premise, which I’ll call ‘E’. If A and B and C and D, then E.”

And so on…

Tools like the dependently typed language Idris or the proof assistant Coq attempt to be a solution but, to some, seem harder to use than just writing the software.

In any case, if absolute total correctness is what you’re interested in then completely different techniques are available. For example, the take-off control systems of the space shuttle:

“Take the upgrade of the software to permit the shuttle to navigate with Global Positioning Satellites, a change that involves just 1.5% of the program, or 6,366 lines of code. The specs for that one change run 2,500 pages”

that is, roughly 2.5 lines of code (6,366 / 2,500) for each whole page of specification…

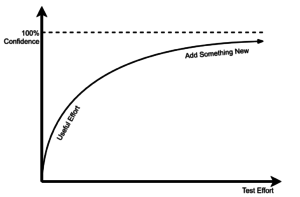

So what about the remaining 99% of software that we all write, where the budget and incentive just aren’t there for this sort of thing? For that we can at least improve confidence as much as possible using testing as a series of layered techniques, and we have to come to terms with the fact that our confidence can only ever be an asymptotic curve at best.

But why even do tests at all? I did in fact come across a programmer once who maintained that “unit tests prove you’re a bad programmer – if you need to write tests then obviously you don’t understand your code!” Needless to say this person wasn’t exactly a team player, instead maintaining “I’ve been programming this way for 20 years and I’m not going to change now”. It was a PHP shop, but we won’t hold that against them…

Tests can exist at various levels of abstraction and scope:

- Unit tests; automated

- Nearly integration tests; automated

- End to end (installed system) tests:

  - Feature acceptance test; if a ticket claims to add a feature or fix a bug, then manually check that feature only

  - “Poke around a bit” tests; scripted and/or a short, manual, defined sequence

  - CAT / full regression smoke tests; scripted and/or a probably very long, manual, defined sequence

- Real users; manual, exploratory testing producing horribly vague bug reports (often shared with their friends rather than you)

And this last type is the real point: if you don’t do testing, and find the bugs, then your users will!

Each of these techniques, particularly when built on the previous one, can result in more confidence. Unfortunately, each type tends to be far more expensive and painful to set up than the one before. End to end tests can be automated but may take a large test server farm or container system for the whole installation, and may not even be possible if there are external service dependencies. Also, some tests may be of a visual UI – automated tests can only tell if the expected data objects in the UI model have the expected settings, but it takes a manual test to determine subjective usability issues.

But aren’t unit tests the most fine-grained and focused tests? Can’t we just stop with them? I think not, as there is a mock/fixture chasm: to unit-test a client and server, one mocks the server in the client tests and uses client fixtures in the server tests. But this is a declaration of contract and not a test of the contract – every project I’ve ever seen (ever!) has had at least one failure in deployment because a mock and fixture didn’t agree. And here’s a philosophical question too: when is a mock so complex you’d be better building a stub service instead? Compare with the notion of a “fake” in Freeman and Pryce, and the problem of verified fakes.
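The chasm can be sketched in a few lines of Python. Everything here is hypothetical (the `/user/1` path, the `user_name` versus `username` field names, and all function names): the client-side mock and the server-side fixture each encode one team's belief about the contract, both unit tests pass, and the disagreement only shows up when the two real halves are wired together.

```python
import json
from unittest.mock import Mock


def client_display_name(http_get) -> str:
    """Client code: reads the field the client *believes* the server sends."""
    payload = json.loads(http_get("/user/1"))
    return payload["user_name"]


def server_handle_user(user_id: int) -> str:
    """Server code: the field name the server *actually* sends."""
    return json.dumps({"id": user_id, "username": "ada"})


def client_unit_test_passes() -> bool:
    # The mock declares the client's assumption about the contract.
    mocked_get = Mock(return_value=json.dumps({"id": 1, "user_name": "ada"}))
    return client_display_name(mocked_get) == "ada"


def server_unit_test_passes() -> bool:
    # The fixture declares the server's assumption about the contract.
    expected_fixture = {"id": 1, "username": "ada"}
    return json.loads(server_handle_user(1)) == expected_fixture


def integration_works() -> bool:
    # Real client against real server: the only place the contract is tested.
    try:
        return client_display_name(lambda path: server_handle_user(1)) == "ada"
    except KeyError:
        return False


if __name__ == "__main__":
    print(client_unit_test_passes(), server_unit_test_passes(), integration_works())
```

Neither unit test is wrong on its own terms; the bug lives in the gap between them, which is why some form of integration or contract test is still needed.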

So should we just get rid of unit tests (as some may suggest)? Well, again, no. Integration tests can only give vague “smoke signals” when a test fails – to find the exact location of the bug will take unit tests, interminable logging, or single-stepping with a debugger (which is the punishment for not writing unit tests). Unit tests can form essential documentation – the language Pyret even makes unit tests a first-class citizen rather than an add-on – and this is particularly true for interface tests:

“Your interface is the most vital component to test. Your interface tests will tell you what your client actually sees, while your remaining tests will inform you on how to ensure your clients see those results.”

While we’re on automated tests let’s talk about the cult of 100% code coverage. We all know that 100% coverage means nothing as we can fake coverage with stupid tests. But there’s this odd idea that “if thing X doesn’t completely prove what you want there’s no point doing it” which seems to forget that “failure of X really does show you have a problem!” 100% static code coverage certainly means nothing, but having only 10% code coverage really means a lot. There are diminishing returns in worrying about code coverage, and do you really want to waste your time writing unit tests for accessor boilerplate etc? By the way – due to an incompatibility in JVM tooling, using Java with Lombok means it’s difficult to get more than 50% coverage in any case – which is a good thing as it highlights how annoying Java is, just use Kotlin or Ceylon instead…

The technology has moved on enough now though that static code coverage measurement should be retired in favour of mutation testing, e.g. PIT (pitest.org).
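As a rough illustration of why mutation testing says more than line coverage, here is a hand-rolled Python sketch. Real tools such as PIT generate mutants automatically; the functions `is_adult`, `is_adult_mutant`, `weak_suite` and `strong_suite` below are invented for this example, and the mutant is written by hand.

```python
def is_adult(age: int) -> bool:
    """Code under test."""
    return age >= 18


def is_adult_mutant(age: int) -> bool:
    """A hand-made mutant: '>=' has been changed to '>'."""
    return age > 18


def weak_suite(fn) -> bool:
    """Executes every line of fn (full line coverage) but never probes the boundary."""
    return fn(30) is True and fn(5) is False


def strong_suite(fn) -> bool:
    """Adds the boundary cases that distinguish '>=' from '>'."""
    return weak_suite(fn) and fn(18) is True and fn(17) is False


if __name__ == "__main__":
    # The weak suite passes for both: the mutant "survives" despite full coverage.
    print(weak_suite(is_adult), weak_suite(is_adult_mutant))
    # The strong suite passes for the original and fails for the mutant: it is "killed".
    print(strong_suite(is_adult), strong_suite(is_adult_mutant))
```

A surviving mutant points at a behaviour the tests execute but never actually check, which is exactly the gap that a coverage percentage hides.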

Speaking of technology, let’s consider automated continuous integration. If you’re using source control with a sensible team policy, e.g. git with “branch per feature”, and why wouldn’t you be, then I hope you also make use of code reviews on merge/pull requests. The load on your team-mate and reviewer is greatly reduced if your software actually works! Maybe you are a genius and always remember to run the tests before pushing a commit, but most people aren’t (myself included) so having the review/CI system automatically report on tests is a valuable safety-net. GitHub can be configured to enforce this. Your peer-reviewer is not your debugging tool – well, unless you feel you already have too many colleagues…

In short:

- Just because you can’t get to 100% doesn’t mean it isn’t worth getting to 80% (and it’s worth recognising that 100% is possibly a waste of time)

- No single technique will solve all the problems

- In fact, using all the techniques may not solve all the problems

- Your end-of-sprint customer-facing demonstration should go flawlessly because your “Automated Demonstrations” have run through it many, many times already…

See also

- https://blog.acolyer.org/2016/10/06/simple-testing-can-prevent-most-critical-failures/

- https://docs.pact.io is an interesting approach to solving the mock/fixture chasm.